SongGLM: Lyric-to-Melody Generation with 2D Alignment Encoding and Multi-Task Pre-Training

Authors

- Jiaxing Yu (Zhejiang University) yujx@zju.edu.cn

- Xinda Wu (Zhejiang University) wuxinda@zju.edu.cn

- Yunfei Xu (OPPO) xuyunfei@oppo.com

- Tieyao Zhang (Zhejiang University) kreutzer0421@zju.edu.cn

- Songruoyao Wu (Zhejiang University) wsry@zju.edu.cn

- Le Ma (Zhejiang University) maller@zju.edu.cn

- Kejun Zhang* (Zhejiang University) zhangkejun@zju.edu.cn

* Corresponding Author

Abstract

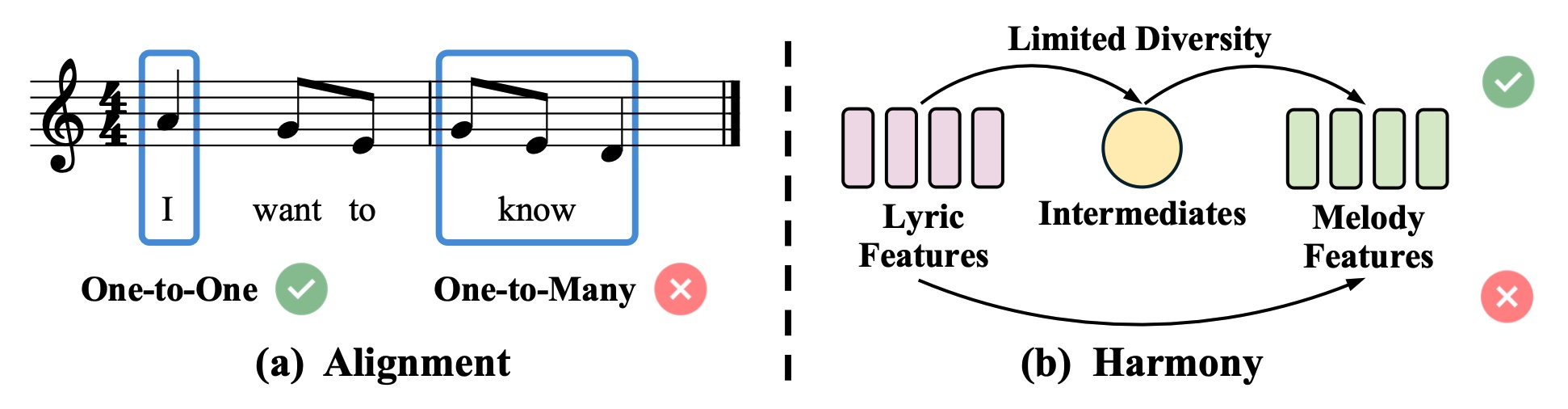

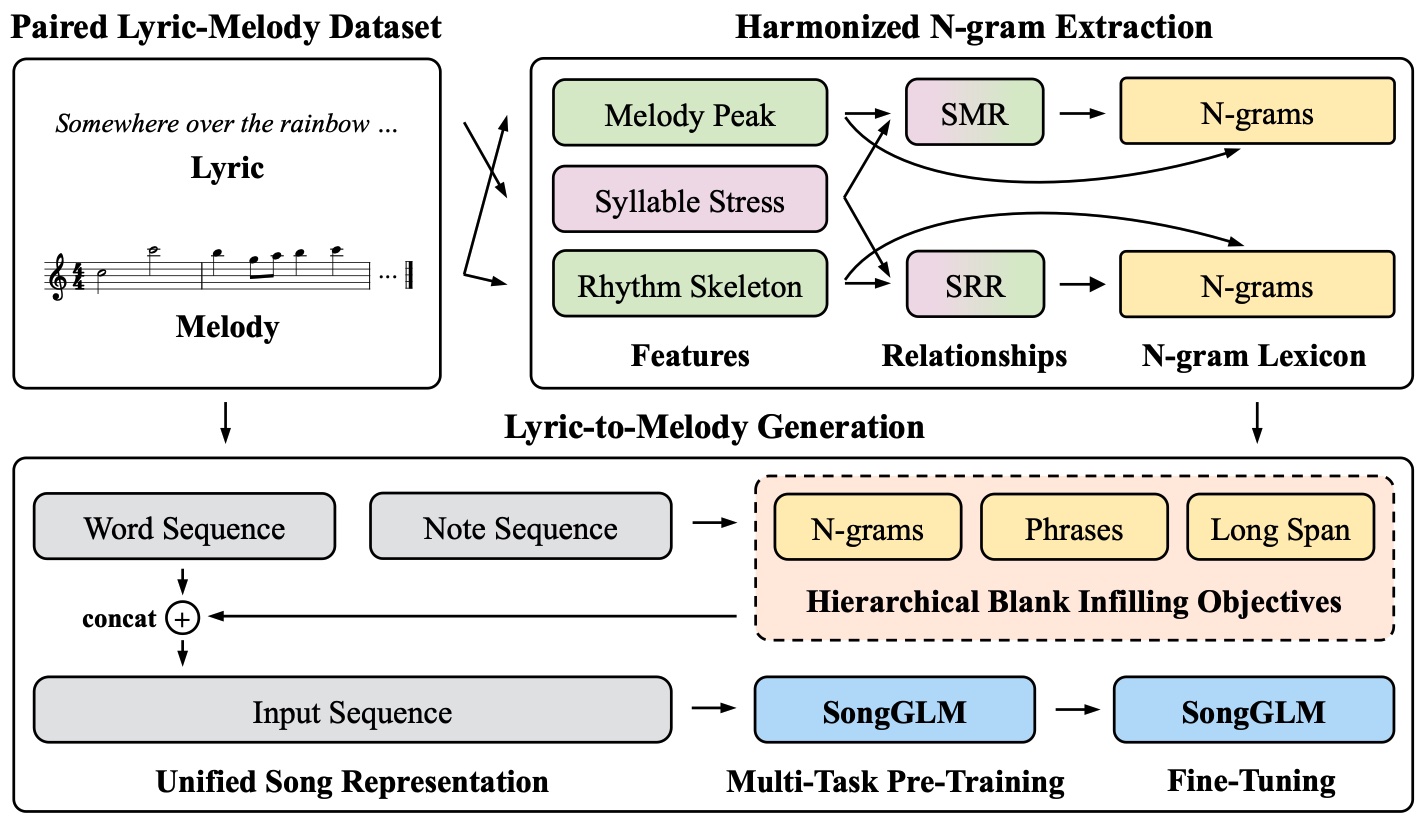

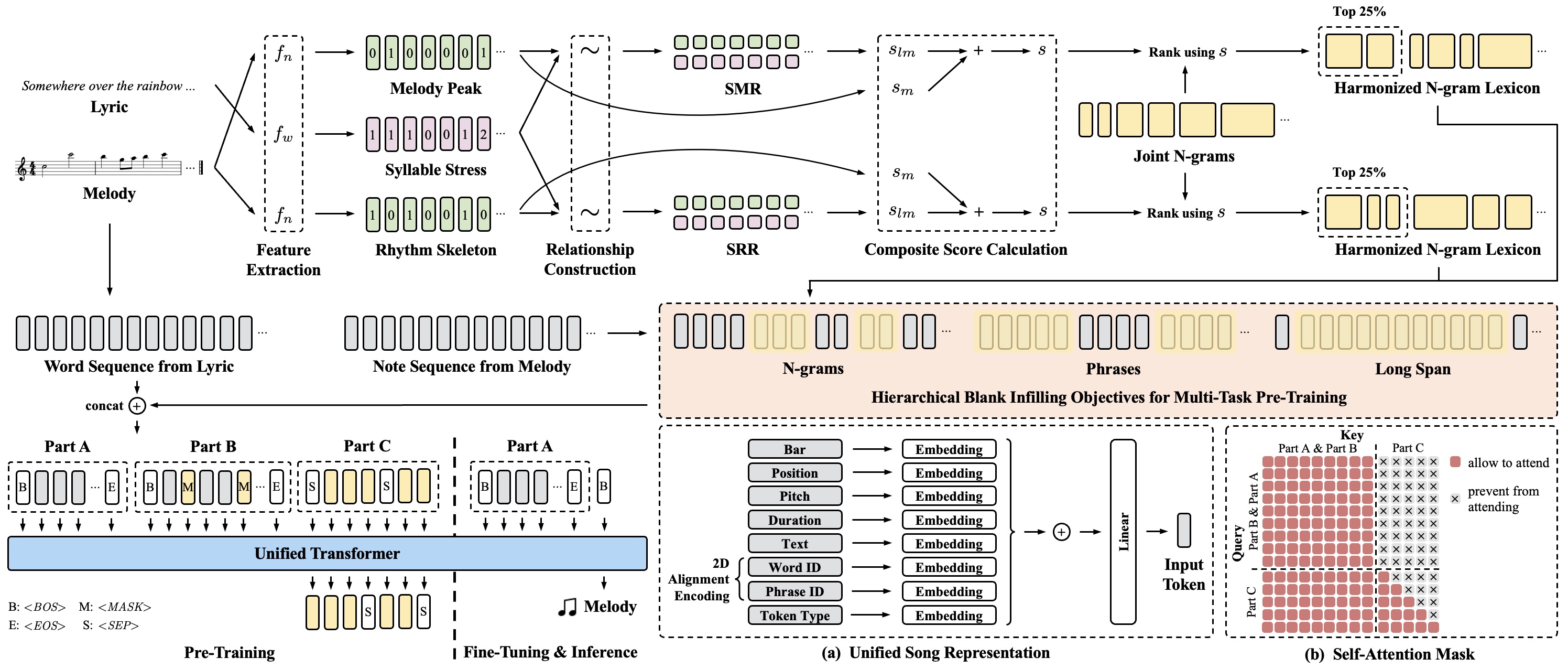

Lyric-to-melody generation aims to automatically create melodies from given lyrics, which requires capturing the complex and subtle correlations between them. However, previous works usually face two main challenges: 1) lyric-melody alignment modeling, which is often simplified to one-syllable/word-to-one-note alignment, while other approaches suffer from low alignment accuracy; 2) lyric-melody harmony modeling, which usually relies heavily on intermediates or strict rules, limiting the model's capabilities and generative diversity. In this paper, we propose SongGLM, a lyric-to-melody generation system that leverages 2D alignment encoding and multi-task pre-training based on the General Language Model (GLM) to ensure alignment and harmony between lyrics and melodies. Specifically, 1) we introduce a unified symbolic song representation for lyrics and melodies with word-level and phrase-level (2D) alignment encoding to capture the lyric-melody alignment; 2) we design a multi-task pre-training framework with hierarchical blank infilling objectives (n-gram, phrase, and long span), and incorporate lyric-melody relationships into the extraction of harmonized n-grams to ensure lyric-melody harmony. We also construct a large-scale paired lyric-melody dataset comprising over 200,000 English song pieces for pre-training and fine-tuning. Objective and subjective results indicate that SongGLM can generate melodies from lyrics with significant improvements in both alignment and harmony, outperforming all previous baseline methods.
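The abstract names word-level and phrase-level (2D) alignment encoding but does not spell out the token layout. The sketch below illustrates the idea under our own assumptions: lyric tokens precede note tokens in one sequence, and every token carries a word index and a phrase index that a word shares with all the notes aligned to it. The names `Note`, `Word`, and `encode_song` and the `word:`/`note:` token strings are hypothetical, not SongGLM's actual vocabulary.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical data model: the source only states that alignment is encoded
# at the word level and the phrase level; this layout is an assumption.
@dataclass
class Note:
    pitch: int       # MIDI pitch number
    duration: float  # duration in beats

@dataclass
class Word:
    text: str
    notes: List[Note]  # a word may be sung over several notes (one-to-many)

Phrase = List[Word]
Token = Tuple[str, int, int]  # (token string, word index, phrase index)

def encode_song(phrases: List[Phrase]) -> List[Token]:
    """Flatten a song into lyric tokens followed by note tokens.

    Every token carries a (word index, phrase index) pair. A word and all of
    the notes aligned to it share the same pair; in a model, these two indices
    could be embedded and added to the token embedding (an assumption).
    """
    lyric_part: List[Token] = []
    melody_part: List[Token] = []
    word_id = 0  # counted across the whole song, so each word gets a unique index
    for phrase_id, phrase in enumerate(phrases):
        for word in phrase:
            lyric_part.append((f"word:{word.text}", word_id, phrase_id))
            for note in word.notes:
                melody_part.append((f"note:{note.pitch}/{note.duration}", word_id, phrase_id))
            word_id += 1
    return lyric_part + melody_part

if __name__ == "__main__":
    song = [
        [Word("twinkle", [Note(60, 1.0), Note(60, 1.0)]), Word("star", [Note(67, 2.0)])],
        [Word("how", [Note(65, 1.0)])],
    ]
    for token in encode_song(song):
        print(token)
```

Because one word can own several note tokens that all share its indices, this kind of layout does not force one-syllable/word-to-one-note alignment, while the phrase index keeps notes from being matched to words in the wrong phrase.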

Lyric-to-Melody Generation Challenges

SongGLM Overview

Given the paired lyric-melody dataset, we first establish two relationships between lyrics and melodies based on their representative features, and incorporate these relationships into n-gram extraction to select the most harmonized n-grams. Then, we introduce a unified symbolic song representation with 2D alignment encoding and adopt a multi-task pre-training framework that employs hierarchical blank infilling objectives for lyric-to-melody generation.
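The three hierarchical objectives (n-gram, phrase, and long span) can be illustrated with GLM-style blank infilling. This is a hedged sketch, not SongGLM's code: the lexicon of harmonized n-grams is assumed to be given (the two lyric-melody relationships used to build it are not modeled here), spans are assumed not to overlap, and the helpers `ngram_spans`, `phrase_spans`, `long_span`, and `blank_infill`, as well as their sampling rules, are hypothetical. The `[MASK]`, `[S]`, and `[E]` tokens follow the GLM convention.

```python
import random
from typing import List, Sequence, Set, Tuple

Span = Tuple[int, int]  # [start, end) token indices
MASK, START, END = "[MASK]", "[S]", "[E]"

def ngram_spans(tokens: Sequence[str], lexicon: Set[Tuple[str, ...]], max_n: int = 4) -> List[Span]:
    """Greedily match, left to right, the longest n-gram found in a (hypothetical) harmonized lexicon."""
    spans, i = [], 0
    while i < len(tokens):
        for n in range(max_n, 1, -1):
            if i + n <= len(tokens) and tuple(tokens[i:i + n]) in lexicon:
                spans.append((i, i + n))
                i += n
                break
        else:  # no n-gram starts at position i
            i += 1
    return spans

def phrase_spans(boundaries: List[Span], k: int, rng: random.Random) -> List[Span]:
    """Pick k whole phrases, given as [start, end) boundaries, to blank out."""
    return sorted(rng.sample(boundaries, k))

def long_span(length: int, ratio: float, rng: random.Random) -> List[Span]:
    """Pick one contiguous span covering roughly `ratio` of the sequence."""
    span_len = max(1, int(length * ratio))
    start = rng.randint(0, length - span_len)
    return [(start, start + span_len)]

def blank_infill(tokens: Sequence[str], spans: List[Span], rng: random.Random):
    """GLM-style corruption of a token sequence with non-overlapping spans.

    Part A replaces each span with a single [MASK]. Part B lists the spans in
    shuffled order: the input is [S] + span and the target is span + [E].
    """
    spans = sorted(spans)
    part_a, prev = [], 0
    for s, e in spans:
        part_a.extend(tokens[prev:s])
        part_a.append(MASK)
        prev = e
    part_a.extend(tokens[prev:])
    order = list(range(len(spans)))
    rng.shuffle(order)
    part_b_in, part_b_tgt = [], []
    for idx in order:
        s, e = spans[idx]
        part_b_in += [START] + list(tokens[s:e])
        part_b_tgt += list(tokens[s:e]) + [END]
    return part_a, part_b_in, part_b_tgt

if __name__ == "__main__":
    rng = random.Random(0)
    melody = ["C4", "E4", "G4", "C5", "B4", "G4", "E4", "C4"]
    lexicon = {("C4", "E4", "G4"), ("E4", "C4")}  # toy stand-in for harmonized n-grams
    objectives = {
        "n-gram": ngram_spans(melody, lexicon),
        "phrase": phrase_spans([(0, 4), (4, 8)], 1, rng),
        "long span": long_span(len(melody), 0.5, rng),
    }
    for name, spans in objectives.items():
        print(name, blank_infill(melody, spans, rng))
```

In a multi-task setup, each training example would draw its spans from one of the three generators and pass them to `blank_infill`. In GLM, Part A serves as bidirectional context while Part B is predicted autoregressively, so the n-gram objective teaches short harmonized patterns, and the phrase and long-span objectives push the model toward longer coherent melody segments.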

Detailed Framework

Lyric-Melody Dataset

| Public Corpus | Website | Raw | Processed |
| --- | --- | --- | --- |
| NES | https://www.kaggle.com/datasets/imsparsh/nes-mdb-dataset<br>https://github.com/chrisdonahue/nesmdb | | |
| POP909 | https://github.com/music-x-lab/POP909-Dataset | | |
| MTCL | https://www.liederenbank.nl/mtc | | |
| Wikifonia | http://www.wikifonia.org<br>http://www.synthzone.com/files/Wikifonia/Wikifonia.zip | | |
| Session | https://thesession.org | | |
| LMD | https://colinraffel.com/projects/lmd | | |
| SymphonyNet | https://symphonynet.github.io | | |
| MetaMIDI | https://zenodo.org/record/5142664 | | |

| Web Collections | Website | Raw | Processed |
| --- | --- | --- | --- |
| MuseScore | https://musescore.org<br>https://github.com/Xmader/musescore-dataset | | |
| Hooktheory | https://www.hooktheory.com<br>https://github.com/wayne391/lead-sheet-dataset | | |
| BitMidi | https://bitmidi.com | | |
| FreeMidi | https://freemidi.org<br>https://github.com/josephding23/Free-Midi-Library | | |
| KernScores | http://kern.ccarh.org | | |
| Kunstderfuge | https://www.kunstderfuge.com | | |
| ABC Notation | https://abcnotation.com | | |